Can modern AI systems solve any problem on their own?

3AI June 27, 2023

Featured Article:

Author: Puneet B C, Data Scientist – Computer Vision, AIRBUS

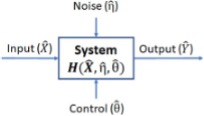

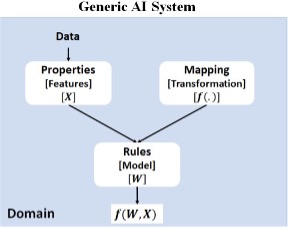

First, let’s briefly understand what AI is. In the sense used here, Artificial Intelligence is an algorithm or rule that makes decisions automatically, where making a decision means generating the desired output for a given input. To achieve this, the algorithm learns a transformation function that maps inputs to their corresponding outputs.

Though AI has existed for decades, what has changed over time is how the inputs are used and how the transformation function is constructed. We can split the AI era into three phases:

- In the first phase, a human had to extract useful properties called features from the data, and the human had to decide what function was supposed to be used to transform this data into the required output. During this phase, computers did not have much intelligence and were just used to compute predefined features and execute the rule engine.

- In the second phase, a human still had to extract the features from the data, but the algorithm could learn the optimal transformation function itself, provided the human gave some cues about which family of functions to search for the solution.

- In the third phase, which is what we are living in now, both the features and the optimal function are automatically calculated by the machine.

Now there are two questions to be answered:

- Can AI solve any problem?

- Can it solve it automatically without human intervention?

Any data, whether it represents wind velocity, temperature, blood pressure, etc., is just a numerical quantity to a computer; it carries no meaning for the machine. A computer is binary at its core, and all data is stored in that format. If image data is sent to a speaker, the speaker happily plays it without complaining, but the result makes no sense. Data gets its significance, relevance, and meaning only when a human interprets it and associates it with a physical quantity in the real world. When a new problem needs to be solved, a computer does not know what data to use unless a human tells it, directly or indirectly. It is not that computers lack the capability to solve these problems; we do not have accurate solutions to many problems today because we as humans are yet to crack them. Only after humans solve a problem can computers take over and bring speed, scale, and customization at an individual level.

Take health projection, for instance. If we as humans still do not fully understand how the human body works, it will be equally difficult for computers to figure it out from human-generated data.

AI algorithms today may have mastered generating highly accurate models from data, but if the data itself is wrong, they will just produce garbage. We have still not been able to build systems that achieve a precision and recall of 1 on real-world problems. In practice, as either precision or recall is pushed very close to 1, the other tends to drop. Hallucinations in ChatGPT could be considered false positives generated by the LLM.
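The tension between precision and recall can be seen in a minimal sketch. The labels and scores below are hypothetical toy data, not output from any real model, and `precision_recall` is a helper written only for this illustration. The positive and negative scores overlap on purpose, which is what forces the trade-off.

```python
def precision_recall(labels, scores, threshold):
    """Return (precision, recall) when scores >= threshold count as positive."""
    predictions = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    # With no positive predictions, precision is treated as 1.0
    # (a convention assumed for this sketch).
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical toy data: 1 = true positive class, 0 = negative class.
labels = [0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.3, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]

# Sweep the decision threshold from lenient to strict.
for threshold in (0.2, 0.5, 0.85):
    p, r = precision_recall(labels, scores, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

On this toy data the lenient threshold catches every positive but lets in false positives, while the strict threshold removes the false positives but misses most positives. If the two classes did not overlap at all, both metrics could reach 1, which is rarely the case with real data.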

For the second question, let’s assume we make an AI system independent of human intervention by automating it end to end: data ingestion → model building → inference → feedback. Now, any error that the system produces will propagate through the loop unchecked and can get amplified over time. The system can eventually go out of control and produce undesired results.
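As a rough illustration of how an unchecked feedback loop can amplify errors, here is a toy simulation. It is only a sketch under strong assumptions: the function `run_loop`, the 2% error added per cycle, and the idea of a human resetting the estimate to the true value are all hypothetical choices made for the example, not measurements of any real system.

```python
def run_loop(cycles, error_per_cycle, true_value=100.0, human_check_every=None):
    """Simulate a system that retrains on its own output every cycle.

    Each cycle multiplies the estimate by (1 + error_per_cycle), so the
    error compounds. If human_check_every is set, a human corrects the
    estimate back to true_value at that interval.
    """
    estimate = true_value
    history = []
    for cycle in range(1, cycles + 1):
        # The system's own output becomes its next input, carrying the error along.
        estimate *= 1 + error_per_cycle
        if human_check_every and cycle % human_check_every == 0:
            # Assumed human intervention: reset to the known ground truth.
            estimate = true_value
        history.append(estimate)
    return history


unchecked = run_loop(cycles=50, error_per_cycle=0.02)
supervised = run_loop(cycles=50, error_per_cycle=0.02, human_check_every=10)

print(f"unchecked, final estimate:  {unchecked[-1]:.1f}")
print(f"supervised, worst estimate: {max(supervised):.1f}")
```

In this sketch the fully automated loop drifts to more than twice the true value, while periodic human checks keep the drift bounded.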

So, irrespective of how much AI advances, data is and will remain in the hands of humans. Machines do not understand the physical significance of data; humans do. Computers lack this understanding because they do not learn by interacting with the physical world and the environment around them. They just use data to find common patterns but do not have a feel for them.

Still, it is surprising how much machines can learn from data alone, and we all know how powerful ChatGPT is. At the time of writing this article, the public version of ChatGPT was the May 12, 2023 release. I asked it, “If 5 clothes take 5 hours to dry in the sun, how many hours will 10 clothes take to dry?” It confidently gave an answer of 10, with a detailed explanation. The correct answer is still 5 hours, since clothes dry side by side, assuming there is room to hang them all at once. In the data it was trained on, ChatGPT would have encountered many ratio-and-proportion examples that fit this pattern of questioning, so it tries to answer in a similar fashion. When AI systems are trained on enormous amounts of data and given a goal to generalize on that data, they tend to learn the context behind the data and not the specifics of it.

We are reaching the limits of improving AI system performance by sheer scaling of data and compute. Unless a system learns some common sense, it becomes difficult to feed it every piece of information it requires. Take pain, for example. Humans know they should not hurt others because they can feel pain and have experienced it firsthand. How do we make a machine feel such emotions? Humans and machines each have unique abilities, and we want to build systems that complement humans rather than compete with us. Unless we find more efficient ways to train AI systems, the current growth will plateau soon.
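Returning to the drying question above, the gap between pattern matching and common sense fits in a few lines. This is only an illustrative sketch: both function names are hypothetical, and the "parallel" version assumes there is enough space and sunlight to dry all the clothes at once.

```python
def drying_time_proportional(base_items, base_hours, items):
    """Ratio-and-proportion pattern: twice the clothes, twice the time."""
    return base_hours * items / base_items


def drying_time_parallel(base_hours, items):
    """Common-sense model: clothes dry side by side, so the count does not matter.

    Assumes all items can be hung out at the same time.
    """
    return base_hours


print(drying_time_proportional(5, 5, 10))  # the answer ChatGPT gave
print(drying_time_parallel(5, 10))         # the answer a human would give
```

The proportional formula is perfectly valid for problems where work is done one item at a time. Nothing in the numbers alone says which model applies; that knowledge comes from understanding how drying works in the physical world.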

Computers are very efficient at handling enormous amounts of multi-dimensional data with high speed and precision, which is clearly beyond what a human can do. Just as the invention of electricity and engines replaced strenuous human physical activities, computers and AI will replace strenuous mental activities. A human would only be required to monitor or evaluate the output of an AI system, and the AI system will take over all the physical and mental heavy lifting.

Current state-of-the-art (SOTA) AI algorithms are optimal only in how well they fit the given data; they are not really efficient learners. AI has still not reached its peak, and the next phase of AI is yet to come. Current AI systems can neither solve any problem nor exist on their own.

Title picture: freepik.com