From Analytics to Gen AI: Understanding the AI & ML foundations of LLMs

3AI August 25, 2023

Featured Article:

Author: Nikhil Bimbrahw, Genpact

From the moment we wake up to the moment we go back to sleep, technology is everywhere. The highly digital life we live and the development of our technological world have become the new normal. According to the International Telecommunication Union (ITU), almost 50% of the world’s population uses the internet, leading to over 3.5 billion daily searches on Google and more than 570 new websites being launched each minute. A surprising fact: over 90% of the world’s data has been created in just the last couple of years!

With data growing faster than ever before, the future of technology is even more interesting than what is happening now. We are just at the beginning of a revolution that will touch every business and every life on this planet. By the end of this year, at least a third of all data will pass through the cloud, and within five years, there will be over 50 billion smart connected devices in the world.

At the rate at which data and our ability to analyse it are growing, businesses of all sizes will be forced to modify how they operate. Businesses that digitally transform will be able to offer customers a seamless and frictionless experience and, as a result, claim a greater share of profit in their sectors. Consider the financial services industry, specifically banking, which used to be done at a local branch. Recent reports show that 40% of Americans have not stepped through the door of a bank or credit union within the last six months, largely due to the rise in online and mobile banking.

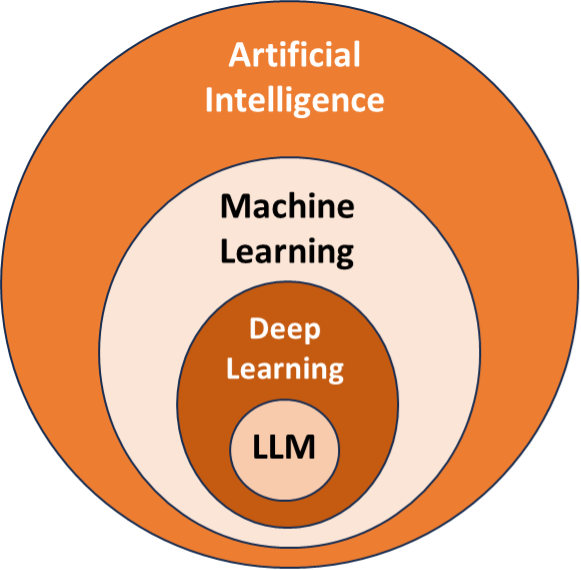

Analytics, Data Science (DS), Machine Learning (ML), Deep Learning (DL), Artificial Intelligence (AI) and Robotic Process Automation (RPA) are terms used to describe the cutting edge of information technology within the area of Data. They are playing an increasingly large role in automating some of the routine processes in our personal and professional lives.

Media companies like Netflix are using ML algorithms to provide viewers with better content suggestions. Dating sites like shaadi.com are using Data Science to help people find the potential husband or wife they are looking for. Banks are increasingly using Deep Learning based robo-advisors to detect market patterns and enable wealth managers to make more accurate investment decisions. Google has built its entire search empire on text-mining and information retrieval algorithms. iRobot makes the Roomba, an AI-enabled vacuum cleaner that automatically cleans dust off floors without any human intervention.

When we think about products like robotic vacuum cleaners and driverless cars as a consumer, it is thrilling to see technologies like AI playing a positive role in our lives. These concepts have tremendous potential in how they can change our professional life! The future is certainly bright when it comes to technologies impacting the way we do our work.

That being said, when we talk about AI, Analytics, LLMs, etc., many people use these terms interchangeably. In the next few pages, I have tried to explain my perspective on them, based upon my experience.

Let us explore these concepts a little more.

Analytics

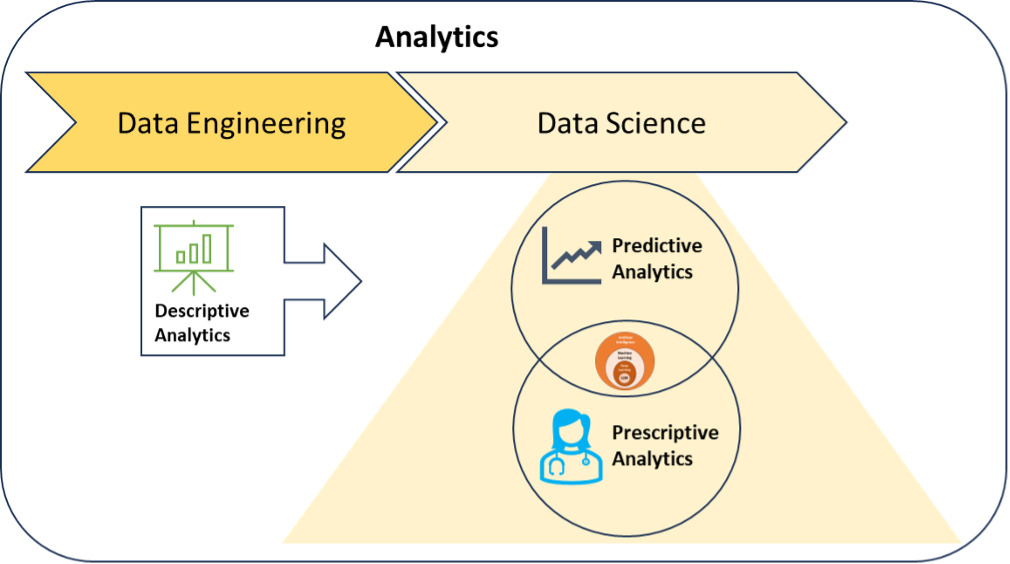

Data Analytics is the systematic computational analysis of data or statistics. It is used for the discovery, interpretation, and communication of meaningful patterns in data, on the basis of which business decisions can be made. It also entails applying data patterns towards effective decision making. Once information from an analytic model is obtained, it can be used to train a machine to learn and predict what actions to take. In its earliest form, Analytics was available to consumers through Reports and Dashboards. It has evolved from a text-only approach to one that includes multiple graphs and charts in order to convey different messages. It has gone by many names in the past, including Data Visualization, Business Intelligence (BI), and Management Information Systems (MIS), and now, with the inclusion of unstructured data/Big Data, it has evolved into Data Science.

As part of this evolution, the term Analytics has come to encompass several different types of Analytics:

- Descriptive Analytics – Identifying what has happened in the past through Key Performance Indicators (KPIs) and Metrics in reports, e.g. Annual Reports. Google Analytics is an example of a tool for descriptive analytics, providing metrics like page views to evaluate past performance.

- Diagnostic Analytics – A subset of Descriptive Analytics that also provides a further level of detail into the ‘why’ of identified trends through root cause analysis. In reality, a lot of this analysis is driven by people.

- Predictive Analytics – Forecasting what will happen next, typically through the projection of past trends into the future using mathematical and statistical techniques like Probability and Linear Regression, e.g. projecting next year’s sales based upon the existing pipeline as well as trends from last year.

- Prescriptive Analytics – Recommending future actions through the use of Simulation and Machine Learning techniques to explore the correlation and causation of various factors impacting the final outcome, e.g. identifying the shortest route for a delivery person to take, or optimizing limited shelf space in a supermarket to maximize profits.
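To make the Predictive Analytics case concrete, here is a minimal sketch of a Linear Regression forecast in plain Python. The sales figures and the `fit_line` helper are invented for illustration; a real project would use an established statistics library and far more data.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalised).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical yearly sales (in thousands) for years 1 to 4.
years = [1, 2, 3, 4]
sales = [100.0, 120.0, 140.0, 160.0]

slope, intercept = fit_line(years, sales)

# Project the past trend one year into the future.
forecast_year_5 = slope * 5 + intercept
print(f"Forecast for year 5: {forecast_year_5:.1f}")
```

The past trend (sales growing by 20 per year) is simply extended forward, which is exactly the "projection of past trends" described above and also its main limitation.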

In order to implement any type of Data Analytics, two key steps need to be performed:

- Data Engineering – this involves the extraction of data from multiple sources through the use of ETL (Extract, Transform, Load) technologies, storing the data in a Data Warehouse or Data Lake, and transforming it into a format that is ready for analysis.

- Data Science – this involves the application of statistical techniques, including AI/ML algorithms in order to derive insights from the data. The algorithms used in Predictive or Prescriptive Analytics fall into the category of Data Science.
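The Data Engineering step can be sketched with nothing but the Python standard library. The CSV content, the `orders` table, and the cleaning rules below are assumptions made up for this example; an in-memory SQLite database stands in for the Data Warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with inconsistent casing, stray spaces and a gap.
RAW_CSV = """order_id,region,amount
1,north, 120.50
2,South,80
3,north,
4,SOUTH,45.25
"""


def extract(text):
    """Extract: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalise region names and drop rows without an amount."""
    clean = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        clean.append((int(row["order_id"]), row["region"].strip().lower(), float(amount)))
    return clean


def load(rows, conn):
    """Load: store the cleaned rows in a table that is ready for analysis."""
    conn.execute("CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

# A simple descriptive metric computed on the prepared data.
totals = dict(conn.execute("SELECT region, SUM(amount) FROM orders GROUP BY region"))
print(totals)
```

Even in this toy case, most of the code deals with cleaning and shaping the data, and only the final query does any analysis.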

The reality of Analytics projects is that Data Scientists or Data Analysts spend 80% of their time in Data Engineering activities focused on the creation of the appropriate data set, and about 20% of their time in Data Science activities focused on modelling.

Artificial Intelligence

Artificial Intelligence is applied when a machine mimics cognitive functions found in human beings, e.g. learning. A more elaborate definition, given by Andreas Kaplan and Michael Haenlein, describes AI as “a system’s ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation.” Examples include understanding human speech (as found in Apple’s Siri and Microsoft Cortana).

There are two broad types of AI:

- Weak AI – designed to perform specific tasks only. All AI applications currently fall into this category.

  - Purely Reactive – Chess simulators fall into this category, where the machine makes its decision based upon the observed moves.

  - Limited Memory – This includes Machine Learning as well as all other use cases that rely on the data in their ‘memory’ in order to make decisions.

- Strong AI – designed to replicate human intelligence. This is largely theoretical as of now.

What makes the intelligent personal assistants in smartphones unique is the ability of technology to understand human speech. Built upon several years of research into Machine Learning algorithms from the Natural Language Processing (NLP) family and heuristics, this ability is a far cry from previous generations of technology that relied upon non-verbal forms of human interaction. Microsoft’s Kinect, which provides a 3D body-motion interface for the Xbox 360 and the Xbox One, uses algorithms that come from the family of Simultaneous Localization and Mapping (SLAM), the same type of algorithms used by NASA’s Mars Rover.

Machine Learning

The process by which machines use algorithms to learn (i.e., grow their knowledge base) and improve their performance through experience is called Machine Learning (ML). There are three main types of ML:

- ‘Supervised learning’ or learning through a process of training, where the machine is supervised by humans, typically through examples that carry the correct answer (labels). The best example to understand this is Amazon providing purchase recommendations based on our browsing and order history, as well as the purchase patterns of others. The suggested videos on YouTube are another such example. Supervised machine learning applications include image recognition, media recommendation systems, and spam detection. All Regression and Classification algorithms fall into this category, as do Neural Networks.

- ‘Unsupervised learning’ is essentially learning through pattern recognition, where the machine identifies the patterns in the data. The machine then clusters the data into segments. Unsupervised machine learning applications include things like determining customer segments in marketing data, and medical imaging. Clustering and Association algorithms fall into this category.

- ‘Reinforcement learning’ is where the machine learns by interacting with the environment in which it is placed, receiving rewards or penalties for the actions it takes. Some applications of reinforcement learning include self-improving industrial robots, automated stock trading, and bid optimization for maximizing the return on ad spend.
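The difference between the first two types can be sketched in a few lines of plain Python. The points, the "low"/"high" labels, and the helper names are invented for this illustration: the supervised part is a nearest-centroid classifier that needs human-provided labels, while the unsupervised part is a basic k-means clustering that only sees the raw points.

```python
def dist2(a, b):
    """Squared Euclidean distance between two 2D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def fit_centroids(points, labels):
    """Supervised: compute one centroid per human-provided label."""
    sums = {}
    for (x, y), label in zip(points, labels):
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def predict(centroids, point):
    """Assign a new point the label of the closest centroid."""
    return min(centroids, key=lambda label: dist2(centroids[label], point))


def k_means(points, centroids, iterations=10):
    """Unsupervised: group unlabeled points around the given starting centroids."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            idx = min(range(len(centroids)), key=lambda i: dist2(centroids[i], p))
            clusters[idx].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else old
            for c, old in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical customers described by (visits per month, items per order).
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
labels = ["low", "low", "low", "high", "high", "high"]

model = fit_centroids(points, labels)         # learns from labels
print(predict(model, (2, 2)))                 # classify an unseen customer

_, clusters = k_means(points, [points[0], points[3]])  # no labels used
print(clusters)                               # segments discovered from the data alone
```

In both cases the same two groups emerge, but only the supervised model can name them, because only it was told what the groups mean.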

Deep Learning

Deep learning (DL) is a subset of machine learning that is primarily a derivative of the Neural Networks family of algorithms and thus attempts to emulate human understanding. However, it also incorporates techniques from reinforcement learning. Deep learning algorithms are able to ingest, process and analyze vast quantities of unstructured and structured data and improve over time without constant human intervention. Some practical applications of deep learning currently include computer vision and facial recognition. But the area that has seen the most traction in 2023 is natural language processing, through the family of ‘Large Language Model’ algorithms.

Reinforcement Learning is a category of Machine Learning in which an algorithm improves its handling of a particular task through repeated iterations of feedback on the right and wrong way of interpreting information. Large Language Models are Deep Learning models applied to Natural Language Processing (NLP), where the objective is to develop a better understanding of a language. They are trained primarily by learning to predict the next word in a text, and some, such as ChatGPT, are then refined using reinforcement learning from human feedback.
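Learning from repeated feedback can be illustrated with a very small reinforcement learning sketch: an "epsilon-greedy" agent choosing between two actions whose success rates it does not know. The function name, the win probabilities, and the parameter values are assumptions chosen for this example, and the setup is far simpler than anything used to train a language model.

```python
import random


def run_bandit(win_probabilities, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which action pays off most often."""
    rng = random.Random(seed)
    counts = [0] * len(win_probabilities)    # how often each action was tried
    values = [0.0] * len(win_probabilities)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(win_probabilities))
        else:
            # Exploit: otherwise pick the action that looks best so far.
            action = max(range(len(values)), key=values.__getitem__)
        # The environment responds with a reward (1) or no reward (0).
        reward = 1.0 if rng.random() < win_probabilities[action] else 0.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical environment: action 0 succeeds 20% of the time, action 1 80%.
values, counts = run_bandit([0.2, 0.8])
best_action = max(range(len(values)), key=values.__getitem__)
print("Estimated value per action:", values)
print("Action the agent prefers:", best_action)
```

The agent is never told which action is better. It works that out from the rewards it receives, which is the essence of learning by interacting with an environment.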

Large Language Models

Machine Learning is a way to create a certain behaviour from a computer by taking in data, forming a model, and then executing the model. But what is a model? A model is a simplification of some complex phenomenon. For example, a model car is just a smaller, simpler version of a real car that has many of the attributes but is not meant to completely replace the original. A model car might look real and be useful for certain purposes, but we can’t drive it to the store. Just like we can make a smaller, simpler version of a car, we can also make a smaller, simpler version of human language.

We use the term large language models because these models are very large in terms of how much memory is required to use them. The largest models in production, such as ChatGPT, GPT-3, and GPT-4, are large enough that they require massive supercomputers running in data centers to create and run.
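A rough back-of-the-envelope calculation shows why. GPT-3 was published with 175 billion parameters; the sketch below assumes each parameter is stored as a 16-bit or 32-bit number and ignores everything else a running model needs, so treat the results as lower bounds rather than exact figures.

```python
def model_memory_gb(parameter_count, bytes_per_parameter):
    """Memory needed just to hold the model's parameters, in gigabytes."""
    return parameter_count * bytes_per_parameter / 1e9


gpt3_parameters = 175_000_000_000  # published size of GPT-3

# Assumption: 2 bytes per parameter (16-bit) or 4 bytes (32-bit).
fp16_gb = model_memory_gb(gpt3_parameters, 2)
fp32_gb = model_memory_gb(gpt3_parameters, 4)

print(f"16-bit weights: {fp16_gb:.0f} GB")
print(f"32-bit weights: {fp32_gb:.0f} GB")
```

Hundreds of gigabytes for the parameters alone is far more than a typical laptop or a single consumer graphics card can hold.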

Currently, we hear a lot about things called GPT-3 and GPT-4 and ChatGPT. GPT is a particular branding of a type of large language model developed by a company called OpenAI. GPT stands for Generative Pre-trained Transformer. Here is the breakdown:

- Generative. The model is capable of generating continuations to the provided input. That is, given some text, the model tries to guess which words come next.

- Pre-trained. The model is trained on a very large set of general text and is meant to be trained once and used for a lot of different things.

- Transformer. A specific type of deep learning model, trained in a self-supervised way, whose self-attention mechanism makes it good at language modeling. The original Transformer design has an encoder and a decoder; GPT models use only the decoder part. In simple terms, it means that the model is very good at learning the construction of sentences.
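The "Generative" and "Pre-trained" ideas can be shown with a deliberately tiny stand-in for a language model. The sketch below is a simple word-pair (bigram) counter, not a Transformer, and the training sentence is made up. It is "trained" once on some text and then generates a continuation by repeatedly guessing the most likely next word.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Pre-training: count which word follows which in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def generate(model, prompt, max_new_words=5):
    """Generation: keep appending the most frequent follower of the last word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the model has never seen this word, so it cannot continue
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical, very small training corpus.
corpus = "the cat sat on the mat . the cat ate the fish . the cat sat on the sofa ."
model = train_bigram_model(corpus)

print(generate(model, "the cat", max_new_words=3))
```

A real LLM replaces the word-pair counts with a Transformer that looks at the whole preceding text, but the loop of "predict the next word, append it, repeat" is the same.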

Within the context of ChatGPT, the terms LLM and Generative AI (Gen AI) are used interchangeably, since ChatGPT is a Generative Model.

Large Language Models are trained on billions of examples of text scraped from the internet. This includes books, blogs, news sites, Wikipedia articles, Reddit discussions, and social media conversations. Essentially, an LLM is trained to produce text that could have appeared on the internet.

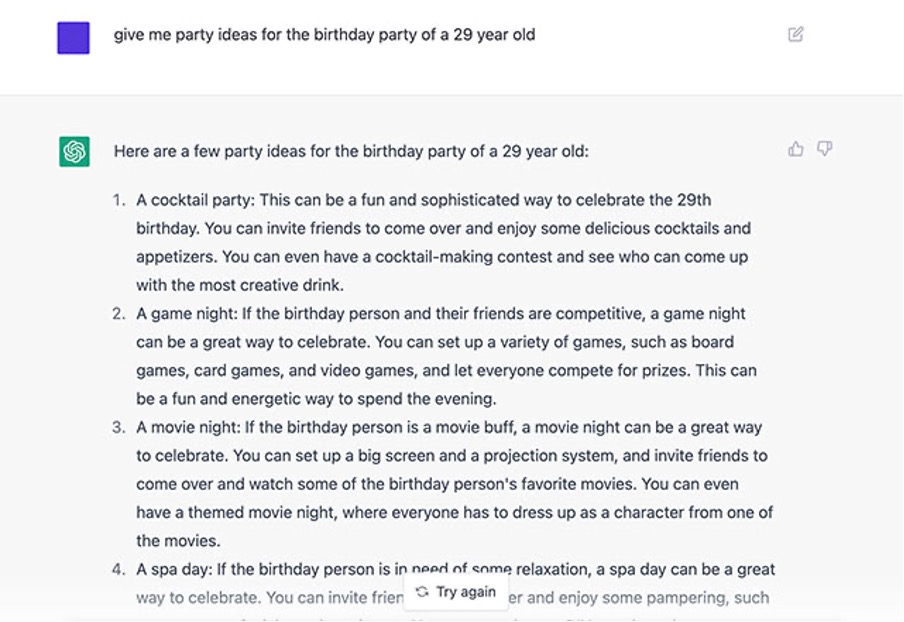

Here is an example of ChatGPT in action:

Company Spotlight: OpenAI

OpenAI is an American artificial intelligence (AI) research laboratory consisting of the non-profit OpenAI Incorporated and its for-profit subsidiary corporation OpenAI Limited Partnership. OpenAI conducts AI research with the declared intention of promoting and developing friendly AI. OpenAI received a $1 billion investment in 2019 and a $10 billion investment in 2023 from Microsoft.

In 2020, OpenAI announced GPT-3, a language model trained on large internet datasets. GPT-3 is aimed at answering questions in natural language, but it can also translate between languages and generate improvised text.

In 2021, OpenAI introduced DALL-E, a deep learning model that can generate digital images from natural language descriptions.

In December 2022, OpenAI received widespread media coverage after launching a free preview of ChatGPT, its new AI chatbot based on GPT-3.5. According to OpenAI, the preview received over a million signups within the first five days.

Google announced a similar AI application (Bard), after ChatGPT was launched.

On February 7, 2023, Microsoft announced that it is building AI technology based on the same foundation as ChatGPT into Microsoft Bing, Edge, Microsoft 365 and other products.

On March 14, 2023, OpenAI released GPT-4, which is being extensively used by the general public for a variety of use-cases.

Title picture: freepik.com