AI Operations: Think Software Development, not Data Science

3AI May 26, 2023

Featured Article:

Author: Kuntal Hansaria, Associate Partner – AI, Analytics & Digital, IBM

AI Governance includes aspects of Explainability (explaining how a model works) & AI/ML Operations (scaling model development, management & deployment).

While Explainability gets a lot of attention, aspects of AI/ML Operations are often ignored. Yet without AI Operations, an organization can never scale AI.

Background

Across industries, the ways of working for AI teams vary. A data science team often develops a model and then hands it over to IT for deployment. These models are developed using a mix of technologies / languages (e.g., SAS, R, Python) in notebooks that are stored on individual machines or on a central repository / server. Usually, the process is democratized, with the intent of giving data scientists freedom and enabling them to build the best model, irrespective of technology or technique.

This scenario works well when the number of models being developed is limited, or when they are developed by a small, cohesive team at one location. It also works when the models are deployed for batch / offline scoring. This is common in the financial services and retail industries, and less so in industrial and product companies.

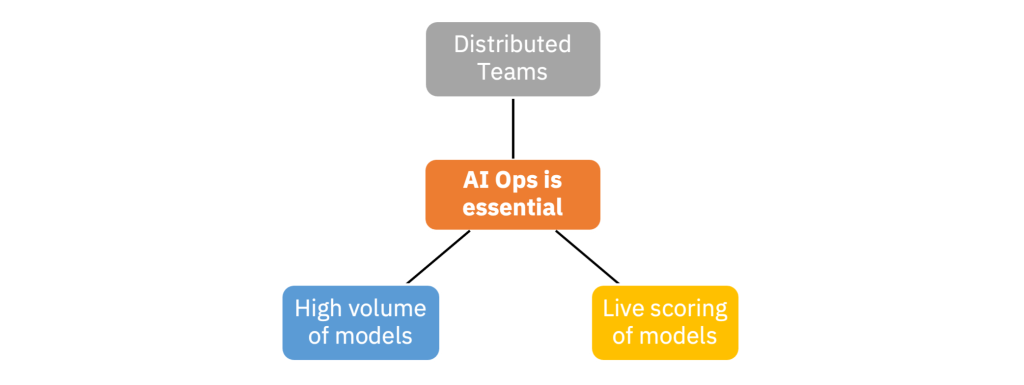

However, when the number of models being developed / maintained becomes large, or when they start interacting with live apps, websites or decision-making systems, there is a need to introduce the tenets of AI governance and make the whole process scalable. The presence of distributed, global teams adds further to this need for AI Operations.

AI Operations

AI Operations has 3 aspects:

- AI Deployment

- AI DevOps

- Change Management

AI Deployment

Once models are developed, they need to be deployed to add value to the business. Deployment can be offline, where we score a file using the model and submit it for a campaign, etc., or online / live, where we convert the model into an API that delivers live responses to requests coming from an application or a user.
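
As a concrete picture of the offline path, the sketch below scores every row of an input file and writes the scores back out, ready to hand to a campaign team. It is a minimal sketch under stated assumptions: the "model" is a hypothetical logistic scoring function with made-up coefficients and feature names (`tenure`, `spend`), and in-memory buffers stand in for real files.

```python
import csv
import io
import math

# Hypothetical model: logistic-regression coefficients with illustrative values only.
MODEL = {"intercept": -1.0, "weights": {"tenure": 0.05, "spend": 0.002}}


def score_row(model, row):
    """Return a probability-like score for one input record."""
    z = model["intercept"] + sum(
        w * float(row[name]) for name, w in model["weights"].items()
    )
    return 1.0 / (1.0 + math.exp(-z))


def batch_score(model, in_file, out_file):
    """Offline scoring: read every row, append a 'score' column, write it out.

    Returns the number of rows scored.
    """
    reader = csv.DictReader(in_file)
    writer = csv.DictWriter(out_file, fieldnames=list(reader.fieldnames) + ["score"])
    writer.writeheader()
    count = 0
    for row in reader:
        row["score"] = f"{score_row(model, row):.4f}"
        writer.writerow(row)
        count += 1
    return count


if __name__ == "__main__":
    # Real jobs would open files on disk; StringIO keeps the sketch self-contained.
    source = io.StringIO("customer_id,tenure,spend\nc1,12,300\nc2,2,50\n")
    target = io.StringIO()
    print(batch_score(MODEL, source, target), "rows scored")
    print(target.getvalue())
```

Keeping `score_row` separate from the file handling means the same scoring logic could later sit behind a live API without being rewritten.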

In a live environment, the volume of requests can vary over time; hence, the API & model need to be containerized (packaged as a self-contained, modular app) so that more copies can be launched to handle more incoming requests, and scaled down when incoming requests decrease. All of this is often handled by the IT team in an organization. The data science team submits its model notebook with development code and the model's binary version (a pickle file, etc.) to the IT team. Thereafter, the IT team converts the development code into a pipeline, wraps the binary model in an API and deploys it on a server / serverless environment.
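
The "model as an API" step can be sketched with nothing but the Python standard library's WSGI interface. This is an illustrative assumption, not how any particular IT team does it: the pickled dictionary stands in for the real model artifact, the JSON request format and field names are hypothetical, and `simulate_post` exists only to exercise the app without starting a server.

```python
import io
import json
import math
import pickle
from wsgiref.util import setup_testing_defaults

# Hypothetical artifact standing in for the pickle file handed over by the data science team.
# Only unpickle files from trusted sources: loading a pickle can execute arbitrary code.
MODEL_BYTES = pickle.dumps({"intercept": -1.0, "weights": {"tenure": 0.05, "spend": 0.002}})
MODEL = pickle.loads(MODEL_BYTES)  # load once at start-up, not on every request


def predict(features):
    """Score one request with the hypothetical logistic model."""
    z = MODEL["intercept"] + sum(w * float(features[k]) for k, w in MODEL["weights"].items())
    return 1.0 / (1.0 + math.exp(-z))


def app(environ, start_response):
    """WSGI app: POST a JSON object of features, get back a JSON score."""
    headers = [("Content-Type", "application/json")]
    if environ["REQUEST_METHOD"] != "POST":
        start_response("405 Method Not Allowed", headers)
        return [b'{"error": "use POST"}']
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        features = json.loads(environ["wsgi.input"].read(length))
        body, status = json.dumps({"score": round(predict(features), 4)}), "200 OK"
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, missing fields or non-numeric values become a 400 response.
        body, status = json.dumps({"error": str(exc)}), "400 Bad Request"
    start_response(status, headers)
    return [body.encode("utf-8")]


def simulate_post(payload):
    """Call the app in-process with a JSON payload (handy for tests and smoke checks)."""
    data = json.dumps(payload).encode("utf-8")
    environ = {"REQUEST_METHOD": "POST", "CONTENT_LENGTH": str(len(data)),
               "wsgi.input": io.BytesIO(data)}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


if __name__ == "__main__":
    print(simulate_post({"tenure": 12, "spend": 300}))
```

For a quick local HTTP test, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` from the standard library is enough. In production, the same app would typically run under a production-grade WSGI server inside a container image, and the platform would add or remove copies of that container as traffic changes.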

If the data science team is mindful of deployment steps and incorporates best practices during development, it can save considerable re-engineering effort and accelerate deployments overall. Data scientists need to start thinking of a model as an app or software package to make this transition natural.

AI DevOps

Data scientists love their Jupyter notebooks, playing with models, trying new tools & techniques. They often hate adding comments, annotations, version control, repository management, etc.; things that are second nature in software development.

A distributed team of data scientists often ends up duplicating effort in data cleaning & transformations. Many create their own non-standard steps and methods. Code versions are not maintained, and neither is the lineage of models. When the number of models is high, maintaining history, versions and compliance statuses is difficult, and it can all quickly become unmanageable.
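
One lightweight way to keep versions and lineage from being lost is to record, for every trained model, an entry that ties the artifact to the exact code and data that produced it. The sketch below is illustrative only: the field names, the use of SHA-256 content hashes as identifiers and the in-memory `ModelRegistry` class are assumptions; in practice the repository tool (commit IDs) and a dedicated model registry would hold this information.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Dict, List, Optional


def sha256_of(data: bytes) -> str:
    """Content hash used as a stable identifier for code, data and model files."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ModelRecord:
    # Hypothetical lineage fields; a Git commit ID could replace code_hash.
    name: str
    version: int
    code_hash: str
    data_hash: str
    artifact_hash: str
    created_at: str
    parent_version: Optional[int] = None  # the version this one supersedes


class ModelRegistry:
    """Append-only, in-memory registry (a stand-in for a real model registry)."""

    def __init__(self) -> None:
        self._records: Dict[str, List[ModelRecord]] = {}

    def register(self, name: str, code: bytes, data: bytes, artifact: bytes) -> ModelRecord:
        history = self._records.setdefault(name, [])
        record = ModelRecord(
            name=name,
            version=len(history) + 1,
            code_hash=sha256_of(code),
            data_hash=sha256_of(data),
            artifact_hash=sha256_of(artifact),
            created_at=datetime.now(timezone.utc).isoformat(),
            parent_version=history[-1].version if history else None,
        )
        history.append(record)
        return record

    def lineage(self, name: str) -> List[dict]:
        """Full version history of one model, oldest first."""
        return [asdict(r) for r in self._records.get(name, [])]


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("churn", b"train.py v1", b"data snapshot A", b"model bytes 1")
    registry.register("churn", b"train.py v2", b"data snapshot B", b"model bytes 2")
    print(json.dumps(registry.lineage("churn"), indent=2))
```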

If the data science team adopts a software development mindset, using a repository management tool and following the tenets of software development & CI/CD processes, managing these assets becomes much easier. Controls can be set so that no individual can build their own methods, and even if they do, those methods cannot make it to production without the required verification and approvals. Model development code can be broken down into modules and maintained as libraries that data scientists “import” into their notebooks, like any other Python library.
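
As an illustration of moving a notebook step into a shared, tested library, consider a column-name cleaning routine that every project would otherwise rewrite. In a real setup this code would live in a team package in the repository (a hypothetical `team_utils.cleaning` module, say) and notebooks would `import` it; the test function is the kind of check a CI pipeline would run before a change is merged. The function names, version string and cleaning rules are assumptions for the sketch.

```python
import re

__version__ = "1.2.0"  # hypothetical; bumped through the repository's release process


def standardize_column_name(name: str) -> str:
    """Lower-case a column name, turn runs of non-alphanumerics into single
    underscores and trim leading/trailing underscores.

    >>> standardize_column_name("  Customer ID (Primary) ")
    'customer_id_primary'
    """
    cleaned = re.sub(r"[^0-9a-zA-Z]+", "_", name.strip().lower())
    return cleaned.strip("_")


def standardize_columns(columns):
    """Apply the naming rule to every column and fail loudly on collisions."""
    result = [standardize_column_name(c) for c in columns]
    duplicates = {c for c in result if result.count(c) > 1}
    if duplicates:
        raise ValueError(f"columns collide after cleaning: {sorted(duplicates)}")
    return result


def test_standardize_columns():
    # A CI job (e.g., running pytest) would execute tests like this on every change.
    assert standardize_columns(["Order Date", "order-ID"]) == ["order_date", "order_id"]
    try:
        standardize_columns(["Total $", "total"])
    except ValueError:
        pass
    else:
        raise AssertionError("expected a collision error")


if __name__ == "__main__":
    test_standardize_columns()
    print("all checks passed")
```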

The model development process can be broken down into modules / snippets and improved upon over time. Modular code snippets can be developed & maintained for data pulls, cleaning & transformations. Similarly, different modelling techniques can be converted into functions that team members call. Their outputs and reports can be standardized so that the team decides on a model's efficacy using pre-defined parameters and visuals. This ensures standardization both in the techniques used and in the way model parameters and related outputs are assessed. New techniques and approaches can then be brought in as a “challenger” to the current “champion”.
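
Standardized outputs and the champion/challenger idea can be sketched as one evaluation function that every model goes through, plus an explicit promotion rule. The metrics chosen here (accuracy, precision, recall), the primary metric and the minimum-improvement threshold are illustrative assumptions; a team would agree on its own pre-defined parameters.

```python
from typing import Dict, Sequence


def evaluate(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Standard report for a binary classifier (labels 0/1): same metrics for every model."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def challenger_wins(champion: Dict[str, float], challenger: Dict[str, float],
                    primary: str = "recall", min_gain: float = 0.02) -> bool:
    """Hypothetical promotion rule: the challenger must beat the champion on the
    primary metric by at least `min_gain` and must not lose accuracy."""
    return (challenger[primary] - champion[primary] >= min_gain
            and challenger["accuracy"] >= champion["accuracy"])


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 0, 1, 0]
    champion = evaluate(y_true, [1, 0, 0, 1, 0, 0, 0, 0])
    challenger = evaluate(y_true, [1, 0, 1, 1, 0, 1, 1, 0])
    print("champion:", champion)
    print("challenger:", challenger)
    print("promote challenger:", challenger_wins(champion, challenger))
```

Encoding the promotion rule as code, rather than leaving it to individual judgement, is what makes the comparison repeatable across the team.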

Change Management

AI Deployment and AI DevOps often require a change in the mindset of the data science team. Data scientists need to learn aspects of software development and take ownership. This takes discipline, a top-down directive and, most importantly, effort on the part of everyone to change their ways of working and adopt new methods.

It needs to start by incorporating the changes into the team's day-to-day work and processes. For example, if someone does not submit their model through the repository management tool with adequate documentation, it should not be accepted. Deviations from standards need justification. Leaders also need to learn how to review and approve / reject the team's submissions in CI/CD tools.

Journey

Transitioning to and adopting AI Operations cannot be done overnight. It is both a technical and a change management journey.

Getting there includes:

- AI Ops Champion(s)

- Repository management

- Assessment & approval process

- Event / Learning interventions

- Model Deployment guidelines

The above list is indicative and not exhaustive. This could look different based on organizational complexity, team dynamics and sharing of roles and responsibilities.

AI Ops Champion(s)

You need one or more software developers among your data scientists / broader team. These could be people who have a strong background in Python and have developed, or been involved in developing, software products / apps in the past. They will lead the charge in driving this change.

Repository Management

Most organizations already have a tool and methods that are being used by software / IT teams. Hands-on training on it for all data scientists is essential.

Assessment & Approval process

Most organizations have a process for evaluating and approving models; in some cases, this is done by another team. This needs to happen through the repository management tool, or through a workflow that checks the completeness of the submission in that tool.
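
Checking the completeness of a submission is straightforward to automate as a gate in that workflow. A minimal sketch, assuming a hypothetical team convention in which every model submission folder must contain a README, a model card, a requirements file and at least one test file; the file names and the README word-count threshold are illustrative, not a standard.

```python
import tempfile
from pathlib import Path

# Hypothetical team convention for a complete model submission (illustrative names).
REQUIRED_FILES = ["README.md", "MODEL_CARD.md", "requirements.txt"]
REQUIRED_PATTERNS = {"unit tests": "tests/test_*.py"}
MIN_README_WORDS = 50  # assumed threshold for "adequate documentation"


def check_submission(folder):
    """Return a list of problems; an empty list means the submission is complete."""
    root = Path(folder)
    problems = [f"missing {name}" for name in REQUIRED_FILES if not (root / name).is_file()]
    for label, pattern in REQUIRED_PATTERNS.items():
        if not any(root.glob(pattern)):
            problems.append(f"missing {label} ({pattern})")
    readme = root / "README.md"
    if readme.is_file() and len(readme.read_text(encoding="utf-8").split()) < MIN_README_WORDS:
        problems.append("README.md is too short to document the model")
    return problems


def main(argv):
    """CLI-style entry point: returns an exit code (0 = complete, 1 = incomplete)."""
    folder = argv[1] if len(argv) > 1 else "."
    problems = check_submission(folder)
    for problem in problems:
        print("FAIL:", problem)
    if not problems:
        print("submission complete")
    return 1 if problems else 0


if __name__ == "__main__":
    # Demo on a deliberately incomplete submission in a temporary folder.
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "README.md").write_text("Churn model. " * 30, encoding="utf-8")
        print("exit code:", main(["check_submission", tmp]))
```

In a CI job, the script would end with `sys.exit(main(sys.argv))`, so a non-zero exit code fails the check and blocks the approval step until the submission is complete.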

Event / Learning Interventions

Events like hackathons, code competitions, etc. help accelerate learning and adoption by the team. In a hackathon, team members could convert their current models into Python and submit them following the CI/CD guidelines. Once they have converted one project, the rest becomes much easier.

Model deployment guidelines

Even when model deployment is not the data science team's responsibility, it helps if they “think” deployment while developing. Using “pipelines” in Python, making the code modular, using standard libraries, etc. are best practices that ease deployment.
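
A minimal sketch of the “pipeline” idea: preprocessing and the model are bundled into one object with a single `fit` / `predict` interface, so the exact steps used in development travel together to deployment. scikit-learn's `Pipeline` is the usual choice in Python; to keep the example dependency-free, the steps below are small hypothetical classes that follow the same `fit` / `transform` convention, and the mean imputation, scaling and threshold “model” are illustrative only.

```python
import pickle
from statistics import mean, pstdev


class MeanImputer:
    """Replace missing values (None) with each column's training mean."""

    def fit(self, rows):
        self.means_ = [mean(v for v in col if v is not None) for col in zip(*rows)]
        return self

    def transform(self, rows):
        return [[m if v is None else v for v, m in zip(row, self.means_)] for row in rows]


class Scaler:
    """Standardize each column using statistics learned during fit."""

    def fit(self, rows):
        cols = list(zip(*rows))
        self.means_ = [mean(c) for c in cols]
        self.stds_ = [pstdev(c) or 1.0 for c in cols]  # avoid dividing by zero on constant columns
        return self

    def transform(self, rows):
        return [[(v - m) / s for v, m, s in zip(row, self.means_, self.stds_)] for row in rows]


class ThresholdModel:
    """Toy model: predicts 1 when the sum of scaled features is positive."""

    def fit(self, rows, y=None):
        return self

    def predict(self, rows):
        return [1 if sum(row) > 0 else 0 for row in rows]


class Pipeline:
    """Fits/applies each transformer in order, then the final estimator."""

    def __init__(self, steps):
        self.steps = steps

    def fit(self, rows, y=None):
        for step in self.steps[:-1]:
            rows = step.fit(rows).transform(rows)
        self.steps[-1].fit(rows, y)
        return self

    def predict(self, rows):
        for step in self.steps[:-1]:
            rows = step.transform(rows)
        return self.steps[-1].predict(rows)


if __name__ == "__main__":
    train = [[1.0, 10.0], [2.0, None], [3.0, 30.0]]
    pipe = Pipeline([MeanImputer(), Scaler(), ThresholdModel()]).fit(train)
    artifact = pickle.dumps(pipe)  # one artifact to hand over, preprocessing included
    restored = pickle.loads(artifact)
    print(restored.predict([[None, 100.0]]))  # → [1]
```

Because the imputation means and scaling statistics are learned inside the pipeline and saved with it, whoever deploys the model does not have to re-implement the preprocessing from the notebook.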

Conclusion

Given the advent of new AI tools and the explosion in use cases, one can no longer ignore AI Operations. While some tools can make the Explainability issue relatively easy to handle, AI Operations needs much more effort, initiative, discipline, and learning. AI Governance cannot be effective anywhere without maturity in AI Operations.

Title picture: freepik.com