Practical insights and best practices for Fine Tuned LLM based use cases for Governed Enterprises

3AI August 11, 2023

Featured Article:

Author: Aditya Khandekar, President, Corridor Platforms India

Objective

There has been a lot of hype around Large Language Models (LLM) and their potential, but harnessing them to build real-world enterprise grade use cases is hard. This blog highlights key best practices and insights for building LLM powered use cases with a working example.

Context

LLM has emerged as a significant force in the family of Generative AI, capturing the imagination of vast possibilities. Companies are embracing these sophisticated models in existing analytical pipelines as well as spawning new use cases which were not easily possible prior to LLM’s. It is crucial to recognize that deploying LLM in production can carry substantial risks and implications if the models don’t work as intended. Several reasons for this, and in this blog, we shall dive into our experience of fine-tuning and employing LLM’s for a domain-specific use case. We will also share best practices along the way.

The Use Case

The use case is to classify a customer’s complaints (transcribed from a call) to one of the five potential problem codes. In the broader analytical pipeline, the problem code along with other customer data (# accounts, balances, tenure etc.) will be used in a decision engine to execute an action to solve the customer’s issue. We focus on the first part of this value chain.

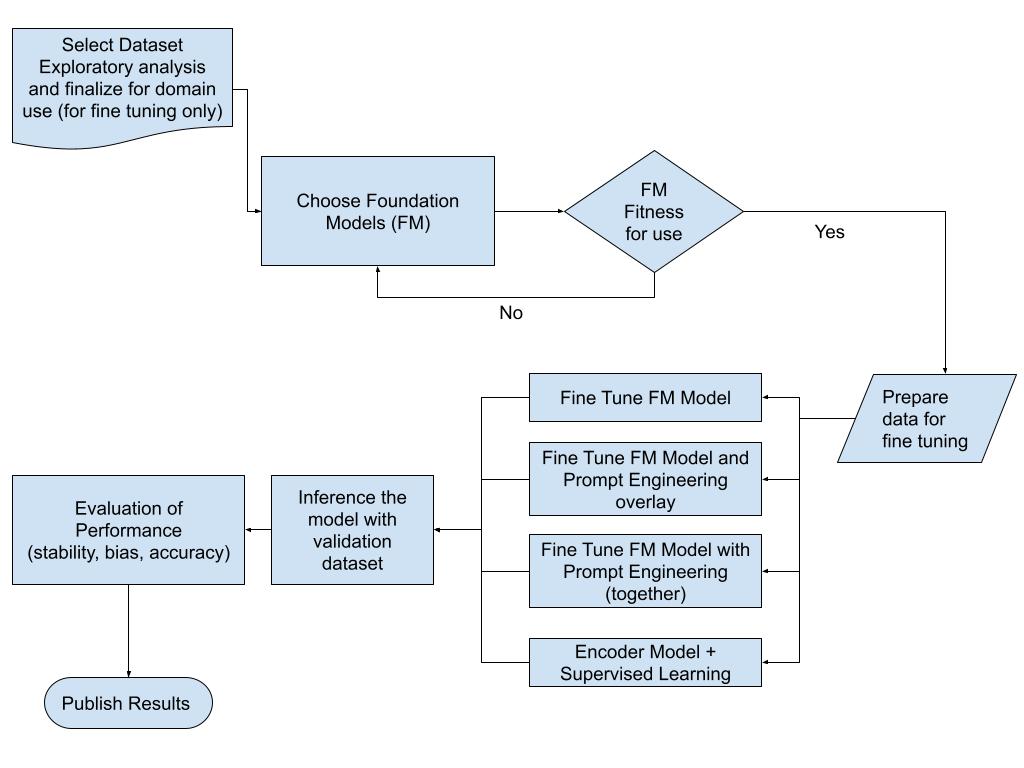

Analytical Pipeline

Select Dataset

The tabular dataset contains the narrative (the consumer complaint) and the tagged problem code for it. Here are the five problem codes (see below). The overall dataset had approx. 160K records evenly distributed across the problem codes.

- credit reporting

- debt collection

- mortgages and loans

- credit cards

- retail banking

Here is an example of a sample record in the dataset:

The raw dataset

| Narrative | Class Label |

| may concern writing dispute fraudulent charge account amount victim identity theft make authorize charge requesting charge removed finance charge related fraudulent amount credited well receive accurate statement request made pursuant fair credit billing act amendment truth lending act see also b writing request method verification dispute initiated subsequent response received enclosed letter accordance fcra section requesting information review completeness accuracy appropriateness lieu sending information reopen dispute ensure proper investigation performed would appreciate timely response outlining step occur resolve matter receive response choice exercise right frca section pursue legal action collection account acct opened balance account is classified into category: | credit reporting |

| Training samples | ~ 166000 |

| Validation samples | 10000 |

| Testing samples | 150 |

Choose Foundational Model (FM)

Foundation Models (in the context of language modeling) are defined as pre-trained Large Language Models, trained on huge amounts of data for multiple tasks. It represents the ‘starting point’ of modern language modeling. From here we can either fine-tune a model, add prompts, or add a knowledge base. Note, FM’s are not all created equal. Some of them could be domain-specific (ex: BloombergGPT by Bloomberg). Even Foundation models trained on similar datasets can also vary in their responses. Some examples include:

- The response from Llama 7B will be different from Llama 7B chat (instruction tuned)

- The response of Falcon 7B and Llama 7B might be different in different scenarios

Insight: As more LLMs are created in the marketplace, a wide diaspora of them will be available by verticals, choosing the right one is VERY critical for the success of the use case.

In our use case, choosing a model with better natural language understanding capability and size was important. Falcon was quite popular when we started. Hence we choose Falcon 7B for our fine-tuning process. Falcon is a family of LLMs built by the Technology Innovation Institute.

Foundational Model (FM) Fitness for Use

In selection of a FM, it’s important that the FM does not carry any ‘inherent latent knowledge’ of the use case which it was not explicitly trained on.

Insight: Given a use case, FM must not have any kind of biases related to the domain specific use case. Even with prompt engineering and instruction, it should not be biased and should only have a general sense of the language construct to aid the use case development.

We ran a set of use case examples on the Falcon FM and noted that we didn’t see a bias of the FM towards the problem code classification, i.e. it was stochastic in response. This gave us comfort to progress to the next step. Some example results are below.

| Text | Generated Text | Label |

| This consumer complaint: follow received mail original security contract however validty debt whic state effective indicate owe dime alarm system one year canceled house broken company monitoring wrote numerous letter contacted get matter resolved year removed credit bureau report company type violation decides place debit report owe unsuccesful getting result either none company provide documentation owe contact well assitance spent much money sending documentation back fourth result | 1. **Unsuccessful** – **Company | Debt collections |

| ### Human: This consumer complaint: stolen medical debt covered insurance uet charge obviously simple insurance adjustment purposely ruining credit call stated could find remark looking report thief is classified into category: | “medical debt” (which is not a valid category) and | Debt collection |

As an add on step, we also fed the FM with a prompt augmented (I will explain prompting more in the next section) examples and noted that it wasn’t able to successfully classify the problem code, but yes, the response space narrowed significantly. See example below.

| Prompt | Generated text | Actual Label |

| ### Instructions: Classify the a given text after ### Human into these 5 classes as follows: – credit reporting – debt collection – mortgages and loans – credit card – retail banking You are not allowed to choose to generate any other texts other than the above classes. Not a single word other than the clases mentioned. You can either respond the answer as ‘credit reporting’ or ‘debt collection’ or ‘mortgages and loans’ or ‘credit card’ or ‘retail baking’. Anything else would not be allowed The answer should only contain one and only class. The answer should not contain any kind of special character like [ or ] or ( or ) ### Human: This consumer complaint: xxxxy xxxxxxxx eaither went business sold company debt collection agency around client current loan paid never late status told loan would serviced third party servicing company would handle loan forward closed account credit bereaus paid full gave information xxxxxxxx debt collection agency without consent later xxxxxxxx reported account new account dollar worth debt paid dollar loan called find going company informed debt collection agency also told fixing problem next month showed dollar worth debt still incorrect seeing complaint stopped payment longer contact original company disputed information credit report tell valid contract signature still report account use bullying tactic reported balance charge would ruined credit probably would continued payment liable contract mine default sent debt collection agency current status payment is classified into category: | credit reporting debt collection mortgages and loans credit card retail banking <|endoftext|>#include | Credit reporting |

In addition, there is a broad area of governance for FM, where as part of fitness of use evaluation, we also check the large corpus of text used for training the FM for security issues, PII leakage (Personally Identifiable Information), quality of corpus etc. This is for now out of scope of this blog and will be covered in details as part of a governance series.

Why the need for Fine Tuning at all?

Insight: One key insight for us was that in most enterprise LLM-based use cases, ‘variance of outcome’ will always be an issue, and to get industrial-level performance that balances risk and benefit, you most probably will need to fine-tune the weights of the underlying foundation model to get a production-grade system.

Prepare data for Fine Tuning

Next, when preparing a dataset for fine tuning for a decoder-style language model, note that there is no explicit ‘dependent variable’, the model is primarily doing a language completion task. Intuitively, the model is just predicting the next token given the previous. So the dataset is prepared as a record level series of prompts, the base format for our use case was:

| ### Human: This consumer complaint: Narrative goes here is classified as: ### Assistant: |

The yellow part is considered as our prompt which forces our model to do completion which mimics a classification. We also used an additional prompt which introduces instructions to our model. Markers like ###System, ###Assistant, <|system prompt>| etc., are used during the fine-tuning of language models to help guide the model’s behavior and generate appropriate responses in specific contexts. These markers act as instructions or cues for the model to follow a particular format or provide certain types of responses when it encounters them in the input text.

Model output can be very sensitive to quality of instructions – a lot has been written in this area of prompt engineering, so will not go deeper in this blog. Here is how our instruction augmented prompt looks like:

| ### Instructions: Classify the a given text after ### Human into these 5 classes as follows: – credit reporting – debt collection – mortgages and loans – credit card – retail banking You are not allowed to choose to generate any other texts other than the above classes. Not a single word other than the clases mentioned. You can either respond to the answer as ‘credit reporting’ or ‘debt collection’ or ‘mortgages and loans’ or ‘credit card’ or ‘retail baking’. Anything else would not be allowed. The answer should only contain one and only class. The answer should not contain any kind of special character like [ or ] or ( or ) ### Human: This consumer complaint: requested item investigated via mail refused perform investigation please delete item attached letter credit report is classified as: ### Assistant: credit reporting |

Insight: As we were experimenting with the dataset preparation via prompts and instructions, a key insight for us was that different foundation models have unique styles for prompt engineering for them to be successful, so it’s important to look at working examples from the FM maker before you embark on custom prompts and instruction for your use case. Your end results will be very sensitive to this task.

Best Practice: To make our experiments easier to execute and flex, as a best practice, we structured our pipeline into parameterized code functions using Python classes. We abstracted out the fine-tuning and the inference pipeline with classes.

Further, we also created our dataset class called FalconDataset which helped us to easily load different variants of the dataset, save them into JSON and load it into the model format.

Next, let’s look at fine tuning methodologies, architectures of models and associated results.

Fine Tuning Methodology

A brute force approach to fine tuning a FM LLM could have pitfalls such as:

- The number of parameters for a foundation model can be very large (models like LLaMA or Falcon have model parameters ranging from 7B to 70B). Hence fine-tuning them with traditional transfer learning approaches can be very compute and time intensive.

- You also run the risk of ‘catastrophic forgetting’ where the foundation model loses its underlying memory while retraining

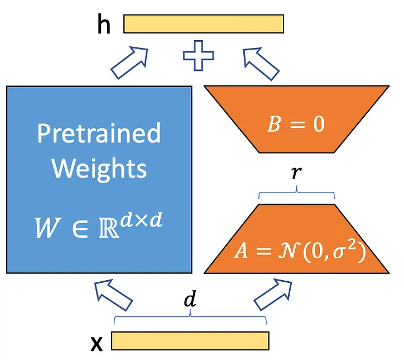

A better, optimized approach is called Parameter-Efficient Fine-Tuning (PEFT) technique like LoRA (Low-Rank Adaptation). LoRA offers a parameter-efficient alternative to traditional fine-tuning methods for LLM like Falcon.

LoRA tackles this challenge of large computation by employing a low-rank transformation technique, similar to PCA and SVD, to approximate the weight matrices of the model with lower-dimensional representations. This approach allows us to decompose the weight changes during fine-tuning into smaller matrices, significantly reducing the number of parameters that need to be updated. As a result, LoRA can efficiently adapt the model to a target domain and task without incurring excessive computational costs. Here is a high level visual representation of the LoRA process (details, out of scope of this blog!)

Evaluation of Performance Methodology

For any organization incorporating LLMs into their systems, it is very important to evaluate the models on at least these three basic criteria:

- Performance of the model (like how well the generated responses are)

- Performance of the model in terms of execution speed

- Testing the model on reliability and robustness.

We evaluated the model on all three dimensions. Since this is a text completion model and we are using it for mimicking a classification task, there are three possible scenarios:

- The generated text will not contain the expected text labels and will only contain random texts (large variance).

- The generated text will contain our expected labels along with some extended text (moderate variance).

- The generated text will only contain expected labels (no variance)

Since we can not define a hard and fast rule-based metric like accuracy for evaluating LLMs for classification, we came up with a metric called loose accuracy. The algorithm is simple, a generated text and labels are the same if the label contains inside the generated text.

Fine Tuned Model Architectures & Results

- Fine Tuned FM Model (no prompt engineering)

The model was fine tuned with our raw dataset (around 160k data points) and we evaluated our model on 150 unseen examples. Here are some results:

Table 4.3

| Prompt | Generated text | Actual Label |

| ### Human: This consumer complaint: previous solution complaint completely wipe wife credit profile going report wife credit history previous credit card previous completely paid car payment house payment never late etc company incompetent pick phone call u pick phone call get right information hard contemplate next legal step pursue legal counsel like get resolved soon possible go road issue cause wife tremendous stress bound delay refinance stand save month is classified into category: | Credit reporting | Credit reporting |

| ### Human: This consumer complaint: notice default notice intent foreclosure letter dated past due balance invalid please see letter dated confirming payment made time correction requested specialized loan servicing complete misapplied payment correction account payment missed loan transfer addition credit report reported reported current addition please correct following correct misapplied payment current payment loan transfer correct late fee waived remove accrued monthly late fee relating misapplied payment remove late payment reporting credit report credit agency correct year date ytd interest principal paid account see excel spreadsheet payment made loan transfer applied principal applied principal applied part principal part interest applied interest corrected misapplied payment applied part interest part payment corrected see loan transcript sheet summary removed delinquency applied interest correction completed specialized loan servicing part principal portion missing final correction request made letter dated attached following supporting documentation relating issue sl notice default notice intent foreclosure letter dated response letter dated showing correction made loan transcript credit reporting agency loan transcript dated showing correction misapplied payment spreadsheet showing correction made ytd interest principal paid report showing specialized loan servicing showing payment history account reported month paid time correction delinquency sl reported late payment history monthly payment made time loan transfer monthly payment missed specialized loan servicing statement specialized loan servicing letter note made regarding correction please make necessary correction account process list request mentioned thank is classified into category: | mortgages and loans is classified into category: ### Assistant | mortgages and loans |

Results: In the above table, we see improvements over the foundation model. Our model is able to classify the problem code the way we want. However for some cases, it is also generating some additional texts (moderate variance).

Lazy accuracy computation for this architecture was 44 % for the test cases.

- Fine Tuned FM Model and Prompt Engineering Overlay

We took our Falcon 7B Fine tuned model (scenario #1 above). But before inference with our examples, we pre prompted it with instructions. We now see better results than just the fine tuned model. Here are some results:

Table 4.4

| Prompt | Generated Text | Label |

| ### Instructions: Classify the a given text after ### Human into these 5 classes as follows: – credit reporting – debt collection – mortgages and loans – credit card – retail banking You are not allowed to choose to generate any other texts other than the above classes. Not a single word other than the clases mentioned. You can either respond the answer as ‘credit reporting’ or ‘debt collection’ or ‘mortgages and loans’ or ‘credit card’ or ‘retail baking’. Anything else would not be allowed The answer should only contain one and only class. The answer should not contain any kind of special character like [ or ] or ( or ) ### Human: This consumer complaint: demand deletion fraudulent account listed resulted reported incident identity theft fraudulent inaccurate account deleted reported identity theft is classified into category: | Credit Reporting | Credit Reporting |

| ### Instructions: Classify the a given text after ### Human into these 5 classes as follows: – credit reporting – debt collection – mortgages and loans – credit card – retail banking You are not allowed to choose to generate any other texts other than the above classes. Not a single word other than the clases mentioned. You can either respond the answer as ‘credit reporting’ or ‘debt collection’ or ‘mortgages and loans’ or ‘credit card’ or ‘retail baking’. Anything else would not be allowed The answer should only contain one and only class. The answer should not contain any kind of special character like [ or ] or ( or ) ### Human: This consumer complaint: coinbase two part verification process first password verification code texted registered number well upon passing first part coinbase never sends second part received text two year j received text text access account crypto currency need info tax brought attention posted social medium page got attention directed chat fold would fixed also promised missed earn capability would reimbursed three day chat today come find chat closed resolution is classified into category: | Retail Banking | Retail Baking |

Results: This is good performance for both model accuracy and controllable generation format.

Insight: Fine tuning only might not be sufficient. When coupled with high quality prompts we can juice out good performance!

Lazy accuracy computation for this architecture was 66% for the test cases.

- Fine Tuned FM Model with Prompt Engineering (together)

We did an additional experiment. We saw good results of fine tuning + prompts in scenario #2 above. So we augmented our thought process, what if we do the fine tuning with instruction tuned prompt in our training dataset. Here are some of the results:

| Prompt | Generated Text | Label |

| ### Instruction: Classify the a given text after ### Input into these 5 classes as follows: – credit reporting – debt collection – mortgages and loans – credit card – retail banking You are not allowed to choose to generate any other texts other than the above classes. Not a single word other than the clases mentioned. You can either respond the answer as ‘credit reporting’ or ‘debt collection’ or ‘mortgages and loans’ or ‘credit card’ or ‘retail baking’. Act as a multi class classifier who ONLY RESPOND either ‘credit reporting’ or ‘debt collection’ or ‘mortgages and loans’ or ‘credit card’ or ‘retail baking’ based on your classification skills. ### Input: xxxxstarted refinance process freedom mortgage lien holder current mortgage est saving xxxxmonth provided paystubs xxxxinformed lender app underwriting loe previous address mother home provided day estimated saving xxxxmonth asked explanation drop determined charged double item already escrow paid current mortgage lender determined mix saving would xxxxmonth receive document sign indicating xxxxmonth saving indication via email lender never gave explanation happened cancelled refinance never received document sign showing saving called complained never heard reason mix would cost money long run refinance completed told rate lower saving xxxxmonth xxxxprovided current paystubs manager told needed get back track received notification manager paystubs received request esign xxxxesigned doc xxxxreceited loe previous address provided even though provided back xxxxreceived esigned doc xxxxasked manager asked document multiple time already provided manager said would handle phone call request paystubs provided manager confirmed received forwarded email provided caller request credit declined lender document needed count multiple request response document received notification requested refinance cancelled absolutely case lender could complete refi process even though document see credit report expired asked loan could completed asked multiple time document already provided asked lender one following complete remove credit inquiry lender reimburse monthly difference current loan refinance amount complete refinance though document count duplicate spoke another manager indicated vp would contact received response two week noted refinance completed made mortgage payment higher payment since refinance completed time lender document asked lender credit account missed saving due completing refinance yet receive response received phone call told is classified into category: | Mortgages and Loans is ### | Mortgages and Loans |

Results: The results we got here were quite solid. However, when it comes to controllability in generation, there were slight issues. We saw that in almost all the model generation some additional text after the class is printed, like:

- Credit reporting is

- Mortgages and Loans is ###

- Credit card ###

There is potential for additional training and optimization to address this issue.

Lazy accuracy computation for this architecture was 74% for the test cases.

Encode Model + Supervised Learning

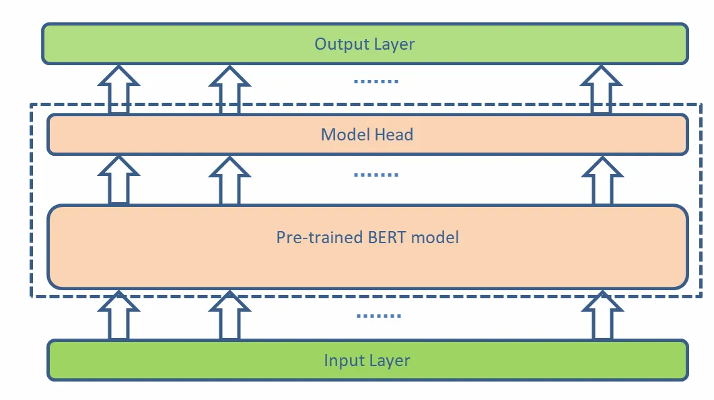

Till now the basis of our experimentation was a decoder only model. Here we used Falcon 7B. But as this is primarily a text classification problem, we wanted to experiment with an encoder only model.

Encoder-only models like BERT are mostly used for text classification, clustering, and token classification problems. Here we provide our tokenized text to an encoder which throws out a unique representation of that text called embeddings (A latent vector) and we use that embeddings to a classifier (which is a neural network) to get our class probabilities as output. The class with the highest probability will be our answer. See visual representation of that architecture:

Here the model will output a number which is mapped to our target class. Here is how they have been mapped.

| Class | Class id |

| credit_reporting | 0 |

| debt_collection | 1 |

| mortgages_and_loans | 2 |

| retail_banking | 3 |

| credit_card | 4 |

This means we do not have to worry about the generation quality of the model. All we have to think about is how accurate it is. And here we used accuracy as a metric versus loose accuracy.

Core accuracy (exact match) for this architecture was 75% for the test cases!

Next steps

I hope this blog has given the audience a better understanding of LLM’s and Encoder architectures for classification use cases and how FM selection, data prep, model architecture plays a critical role in getting enterprise grade results. I also shared some of the best practices and insight along the way.

In the next blog, I will focus more on the governance aspects for the FM and fine tuned models and contemporary research in those areas in this fascinating and evolving area of NLP!

Title picture: freepik.com